Snapchat’s looking to ensure greater transparency on content created by its generative A.I. tools, with new tags and indicators that will now be appended to generated images.

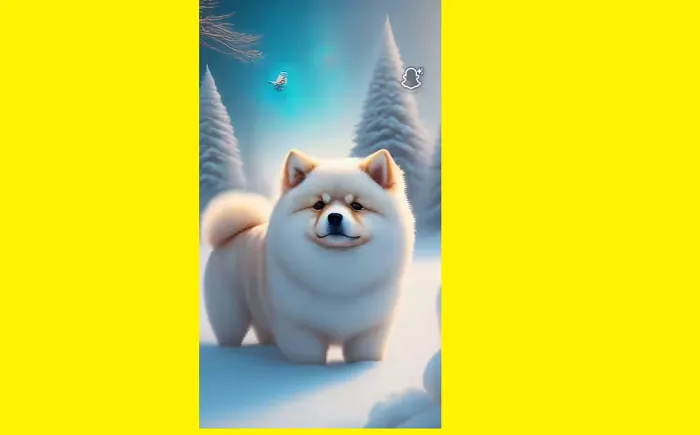

As you can see in this image, Snapchat’s adding a new, custom ghost icon to images generated in the app, which will be included on any content that you download via Snap’s gen A.I. features.

As explained by Snap:

“Some generative AI-powered features, like Dreams and AI Snaps, allow you to create or edit images. When you export or save a generated image to Camera Roll, a watermark of a Snap Ghost with sparkles may be added to those images. The purpose of these watermarks is to provide transparency that the image was created with generative AI and is not real or based on real events, even if it is a realistic style.”

Snapchat also notes that it already uses various indicators within the app to signal A.I. tools, including “A.I.” tags on Lenses:

“For example, when a Snapchatter shares an AI-generated Dreams image, the recipient sees a context card with more information. Other features, like the extend tool which leverages AI to make a Snap appear more zoomed out, are demarcated as an AI feature with a sparkle icon for the Snapchatter creating the Snap.”

The new indicators will provide external markers as well, which will help to better inform viewers that these are not real images.

In addition to the new tags, Snap has also published an overview of its A.I. features and rules, which includes guidance as to how and where A.I. creations can be shared.

The overview includes some interesting notes, like:

“Do not assume generative AI outputs are true or depict real events. Generative AI can and will make mistakes, and as a result, outputs may be incorrect, inappropriate, or wrong.”

Seems like a fairly broad catch-all to cover Snap in case of issues.

Snap also notes that removal of its new “Ghost with sparkles watermark” is a violation of its Terms, another measure that could help Snap distance itself from future issues.

Snap, like all companies that are implementing generative A.I. elements, has had to deal with various issues with its outputs, with youngsters initially able to get advice on how to make drugs, how to conceal alcohol, and more, via its MyAI chatbot.

Snap has since worked to update the tool, with more safeguards and protections. But as this technology evolves, there are going to be misuses and mistakes, and people are going to try to use these elements to mislead and misinform, for varying purposes.

Which is why transparency is key, and these new measures provide some additional display and enforcement tools for Snap’s team.

They won’t be a cure for all of the related issues, but they’re another way to ensure greater transparency.